Prof. Jan Bosch

Few technologies create as much hype, excitement and fear these days as artificial intelligence (AI). The uninitiated believe that general AI is around the corner and worry that Skynet will take over soon. Even among those who understand the technology, there’s amazement and excitement about the things we’re able to do now and lots of predictions about what might happen next.

'Rolling out an ML/DL model remains a significant engineering challenge'

The reality is, of course, much less pretty than the beliefs we all walk around with. Not because the technology doesn’t work, as it does in many cases, but because rolling out a machine learning (ML) or deep learning (DL) model in production-quality, industry-strength deployments remains a significant engineering challenge. Companies such as Peltarion help address parts of this challenge and do a great job at it.

Taking an end-to-end perspective, in our research we’ve developed an agenda that aims to provide a comprehensive overview of the topics that need to be addressed when transitioning from the experimentation and prototyping stage to deployment. This agenda is based on more than 15 case studies we’ve been involved with and over 40 problems and challenges we’ve identified.

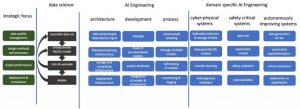

The AI Engineering research agenda developed in Software Center

The research agenda follows the typical four-stage data science process: getting the data, creating and evolving the model, training and evaluating it, and then deploying it. For generic AI engineering, we identify, for each of the stages, the primary research challenges related to architecture, development and process. These challenges are mostly concerned with properly managing data, federated solutions, ensuring the various quality attributes, integrating ML/DL models into the rest of the system, monitoring during operations, and infrastructure.

In addition to the generic AI engineering challenges, we recognize that different domains have their own unique challenges. We identify the key challenges for cyber-physical, safety-critical and autonomously improving systems. For cyber-physical systems, as one would expect, the challenges concern managing many instances of a system deployed in the field at customers. For safety-critical systems, explainability, reproducibility and validation are key concerns. Finally, autonomously improving systems require the ability to monitor and observe their own behavior, generate alternative solutions for experimentation and balance exploration versus exploitation.
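To make the exploration-versus-exploitation balance concrete, here is a minimal sketch using an epsilon-greedy strategy. This is one common textbook technique, not the approach from our research agenda. The function names, the `true_rates` success probabilities and the default parameter values are all hypothetical and chosen purely for illustration.

```python
import random


def choose_variant(mean_rewards, epsilon, rng):
    """Pick a variant index: explore with probability epsilon, else exploit."""
    if rng.random() < epsilon:
        # Explore: try a randomly chosen alternative solution.
        return rng.randrange(len(mean_rewards))
    # Exploit: use the variant with the best observed mean reward so far.
    return max(range(len(mean_rewards)), key=lambda i: mean_rewards[i])


def run_experiment(true_rates, epsilon=0.1, steps=5000, seed=0):
    """Simulate a system that observes its own outcomes and adapts.

    true_rates are hypothetical success probabilities per variant; a real
    system would not know them and would only see the observed rewards.
    """
    rng = random.Random(seed)
    counts = [0] * len(true_rates)
    means = [0.0] * len(true_rates)
    for _ in range(steps):
        i = choose_variant(means, epsilon, rng)
        reward = 1.0 if rng.random() < true_rates[i] else 0.0
        counts[i] += 1
        # Incremental update of the running mean reward for variant i.
        means[i] += (reward - means[i]) / counts[i]
    return counts, means


if __name__ == "__main__":
    counts, means = run_experiment([0.2, 0.8])
    print("times each variant was used:", counts)
    print("observed mean reward per variant:", means)
```

A higher `epsilon` spends more traffic on trying alternatives, while a lower one relies more on the currently best-known variant. Choosing that trade-off for systems deployed at customers is exactly the kind of decision the agenda points to.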

In conclusion, building and deploying production-quality, industry-strength ML/DL systems requires AI engineering as a discipline. I’ve outlined what we, in our research group, believe are the key research challenges that need to be addressed to allow more companies to transition from experimentation and prototyping to real-world deployment. This post is just a high-level summary of the work we’re engaged in at Software Center, but you can watch and read or contact me if you want to learn more.